AI in Autonomous Vehicles: How Safe is Self-Driving Technology?

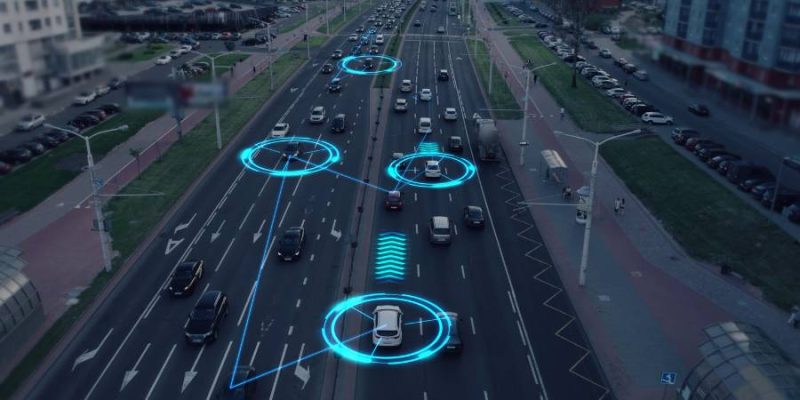

Imagine a world where your car is the designated driver. No more fumbling with maps, no more worrying about road rage, and no more wondering if you should have taken that last exit. This is the promise of autonomous vehicles (AVs) powered by artificial intelligence (AI). But as we speed towards this futuristic vision, one question looms large: How safe is self-driving technology really?

The Allure of Autonomous Vehicles

The idea of autonomous vehicles has captivated our imaginations for decades. Film and television have long teased us with visions of cars that could drive themselves, from the intelligent KITT in Knight Rider to the eerily autonomous cars in I, Robot. Now, thanks to rapid advances in AI, machine learning, and sensor technology, this science fiction dream is becoming a reality.

Companies like Tesla and Waymo are at the forefront of this revolution, pouring billions into research and development, and Uber was a major player until it sold its self-driving unit in 2020. AI enables these vehicles to navigate roads, recognize objects, and make split-second decisions. The potential benefits are enormous: fewer traffic accidents, reduced congestion, lower emissions, and greater mobility for people who can’t drive, such as some older adults and people with disabilities. But before we hand over the keys to machines, we must ask: Are they truly ready for prime time?

The Safety Dilemma: Are We Ready to Trust AI with Our Lives?

The allure of convenience is undeniable, but what about safety? According to the World Health Organization, about 1.2 million people die each year in road traffic crashes, with human error being a significant factor. Autonomous vehicles promise to reduce this number dramatically, but only if they perform reliably across the messy, unpredictable conditions of real roads.

However, the reality is that self-driving technology is still in its infancy, and the road to perfection is riddled with potholes. There have been several high-profile accidents involving autonomous vehicles, some of which have been fatal. These incidents have sparked a fierce debate about whether we are rushing into a future that we are not yet prepared for.

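Consider the 2018 case in Tempe, Arizona, where an Uber test vehicle operating in self-driving mode struck and killed a pedestrian. Investigators found that the vehicle’s sensors detected the woman several seconds before impact, but the software repeatedly misclassified her and failed to predict her path. Uber had also disabled automatic emergency braking while the car was under computer control, relying instead on a human safety driver, who was looking away from the road. This tragedy raises a chilling question: Can we trust AI to make life-and-death decisions?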

The Ethical Maze: Who Decides Who Lives or Dies?

AI-driven cars don’t just need to be smart; they need to be ethical. Imagine this scenario: A self-driving car faces an unavoidable crash. It must choose between hitting a pedestrian or swerving into oncoming traffic, potentially harming its passengers. Who should the car protect? The pedestrian or the passengers? Should it make decisions based on the number of lives at stake? Or should it prioritize the safety of its occupants?

These are not just hypothetical questions—they are real ethical dilemmas that engineers and ethicists are grappling with right now. The challenge is to program AI with a moral compass that aligns with societal values. But whose values should the AI follow? And who is responsible when things go wrong?

The Regulatory Quagmire: Who’s Holding the Steering Wheel?

As with any disruptive technology, regulation is lagging behind innovation. There is currently no single, binding global standard for autonomous vehicle safety. In the United States, federal rules set vehicle safety standards, but laws on testing and deploying self-driving cars vary from state to state, creating a patchwork that manufacturers must navigate. In some areas, fully driverless cars already carry passengers on public roads, while in others, their testing and deployment are tightly restricted or not permitted at all.

This lack of consistency is a major hurdle for widespread adoption. Without clear regulations, manufacturers are largely left to police themselves, which has raised concerns about transparency and accountability. Furthermore, as AI evolves, so too must the laws that govern it. But can legislation keep up with the rapid pace of technological advancement?

The Road Ahead: Are We Ready for Widespread Adoption?

So, are self-driving cars ready for widespread adoption? The answer is both yes and no. On one hand, the technology has made incredible strides and is already proving to be safer than human drivers in some scenarios. On the other hand, there are still significant challenges to overcome before we can fully trust AI with our lives.

The truth is, autonomous vehicles are not just about technology; they are about trust. Before we can embrace a world where cars drive themselves, we need to be confident that they can do so safely, ethically, and reliably. This will require not just advances in AI, but also robust regulations, ethical guidelines, and a societal willingness to accept that machines can make decisions that affect human lives.

Conclusion: A Cautious Approach to the Future

The future of transportation may well be autonomous, but it is a future that must be approached with caution. Self-driving technology holds immense promise, but we must ensure that it is safe, ethical, and well-regulated before we can fully embrace it. Until then, the dream of a world without car accidents, traffic jams, and driving stress will remain just that: a dream.

So, the next time you see a self-driving car glide past you on the road, ask yourself: Are we really ready to let AI take the wheel?

As we stand on the brink of a new era in transportation, it’s time for a serious conversation about safety, ethics, and the future we want to create. Share this post if you believe it’s a discussion worth having.