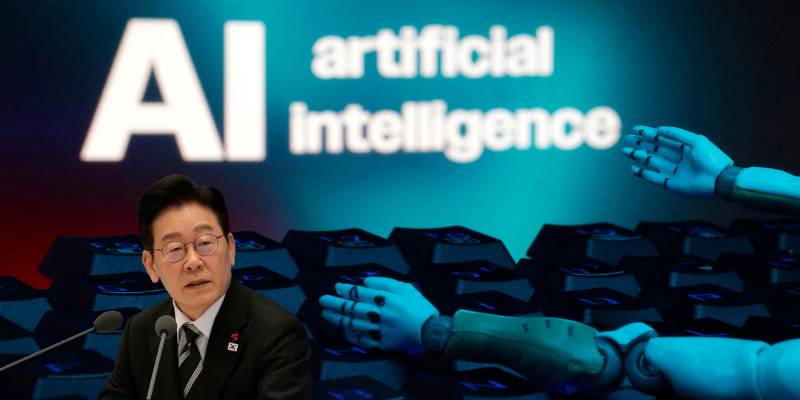

South Korea Just Drew the World’s Sharpest Line on AI – And Startups Are Already Sweating

South Korea has put a sweeping set of AI rules into effect, a milestone that highlights the intense race among Western powers and their Asian rivals, led by China, to shape the next generation of the technology.

The new rules, known as the AI Basic Act, could push other countries to develop their own regulations or risk importing AI systems governed by no rules at all. They are designed to show how powerful A.I. systems can be developed and applied in a controlled way, particularly in domains where getting things wrong causes not just inconvenience but real harm.

People are watching the move from well beyond Seoul, partly because it is a bold bet and partly because it is a risky one. It also raises a question that many countries have been circling: If A.I. is changing this quickly, can governments afford to keep regulating slowly?

The announcement and early reactions were covered in earlier reports on the law's launch, its effect on startups and fears about compliance.

The rollout shows just how serious the country is about moving AI from a free-for-all to a licensed and monitored industry.

What sets South Korea apart is how crisply it draws a line around what it deems “high-impact” AI – systems that run in areas such as health care, public infrastructure and finance.

In other words, the places where A.I. is no longer just an answer to a question or the generator of images, but a deciding force in outcomes that have real-world consequences for people’s money, safety and lives.

Under the new regime, such systems will require more oversight and, in many cases, explicit human supervision.

That may sound like a no-brainer, but in practice it’s a radical departure, since the whole point of automation is removing people from the loop. South Korea is basically saying: Whoa, not so fast there.

If an algorithm can determine someone’s future, then someone should be responsible for it.

That expectation – human accountability for machine decisions – is quickly becoming the cutting edge of modern AI politics, and it’s the piece that tech companies often quietly fear.

The law also addresses head-on one of the most controversial aspects of the current explosion in AI: synthetic content.

If A.I. creates something that looks realistic, people should be made aware of it. The South Korean law puts some teeth behind the idea that generative A.I. outputs should, in some cases, be labeled, a policy response to escalating fears about deepfakes, impersonation and A.I.-driven disinformation.

And, well, it’s hard to argue with the motivation. We are entering an era when the average person can no longer trust that he or she will be able to tell what’s real – not just in a photograph but in audio and video recordings as well.

The policy direction here aligns with broader international momentum to make AI content more transparent.

The wider context is also evident in how the story has been picked up and discussed beyond the Reuters coverage.

International business coverage explains why global markets are following the law closely.

Yet while policymakers describe it as a trust-building step, startups warn that compliance expectations could turn out to be an anchor around their ankles.

It’s not the law’s intent that makes early-stage founders nervous – it’s what they know of its operational reality.

And every requirement – documentation, risk assessments, oversight mechanisms, labeling standards, reporting obligations – takes time, lawyers and internal process. Big companies can absorb that.

A tiny AI startup operating with a handful of employees and minimal runway? Not always. Cost isn’t the only worry, either. There is also uncertainty.

When founders can’t easily predict how the rules will be applied, they often slow down or leave entire product domains untouched.

And in AI, hesitation is deadly, because the tempo is relentless. This tension between safety and speed has played out again and again in other countries as they try to establish guardrails for A.I., but South Korea is moving more quickly and decisively than many of them.

Analysis of the law reflects the sense that Korea is seeking to regulate ahead of the curve rather than behind it.

What’s really intriguing is that South Korea is not doing this out of fear – it is doing it out of ambition.

The country wants to be a serious global power in AI, not just a consumer of models built elsewhere.

But AI regulation has shifted from a matter of governance to one of geopolitical competition.

Governments want to encourage innovation because they are afraid of falling behind, yet they also fear the fallout when it goes wrong. They want startups, not scandals.

They crave audacious technology – just not the sort that can topple trust overnight.

The South Korean strategy looks like an effort to steer between those competing goals: keep the AI engine humming, but install brakes before someone gets hurt.

Whether it works will come down to how the law is enforced in practice. If the rules are applied with flexibility and clarity, South Korea may ultimately demonstrate that big AI innovation can coexist with guardrails.

But if enforcement becomes overly strict, and compliance a labyrinth to navigate, the country risks the AI not being built there at all. Founders could either build elsewhere or steer clear of high-impact domains altogether, leaving the cutting edge of sensitive AI applications to only the largest players. That would be an ironic result for a law intended to make AI safer for all.

For now, South Korea has made the first move in what seems like a new stage in the AI race: not who can build the biggest model, but who can build the most powerful AI while still keeping public trust.

More countries will follow. Some will copy Korea. Some will argue with it. Others will bide their time and see what breaks loose.

But either way, the world is watching South Korea test out what AI governance looks like when it’s no longer theoretical.

If you’re looking for a local policy lens, this Korea-focused explainer provides a helpful sense of how these rules are being framed differently at home.