I Tested UNGPT: Some Features Surprised Me

Have you ever written something — maybe a thoughtful email, a short essay, a caption that hit just the right emotional chord — and then wondered, Would a machine think I’m a machine for writing this too well?

Because, same.

In the blurry space between human voice and generative filler, we now have a whole buffet of AI detectors. Some are brilliant. Some are, honestly, glorified guesswork with a fancy interface.

And then there’s UNGPT.ai — a name that sounds like a declaration of war against ChatGPT itself. Dramatic? A little. But hey, branding’s half the battle.

I tested UNGPT the way any curious (slightly neurotic) writer would: with skepticism, caffeine, and a folder full of writing samples ranging from actual AI drafts to deeply personal late-night rants.

What follows is a full breakdown — honest, messy, opinionated, but grounded in real use.

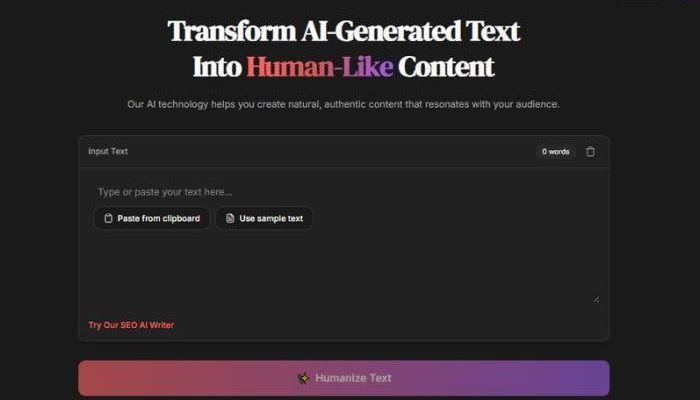

So… What Is UNGPT.ai?

UNGPT.ai is a free online AI detector that promises to tell you whether your text was generated by AI or written by a good ol’ flesh-and-blood human.

But here’s the twist: instead of a red-green light or a percentage of suspected AI, it gives you an “AI-free score,” which sort of feels like a reverse purity test. The higher the score, the more human your text supposedly is.

Simple enough.

Paste your text into the box. Hit the button. Wait a few seconds. Boom — a verdict.

But the question is: how good is that verdict? Because calling someone’s heartfelt writing “machine-made” is a surefire way to ruin their week, or their GPA, depending on who’s checking.

My Unglamorous Testing Process

Here’s what I threw at UNGPT:

- Pure ChatGPT content (default tone, no edits)

- Human-written blog posts (mine, written before AI was trendy)

- Hybrid content — AI drafts rewritten with personality

- Emails to friends (chaotic, typo-ridden, full of feeling)

- Rants from my Notes app (don’t judge)

- A creative essay I wrote in 2021 during a mild identity crisis

Each sample was run through UNGPT and then cross-tested with GPTZero, Originality.ai, and Phrasly for context.

Here’s how it all shook out:

Detection Results: Scorecard Snapshot

| Sample Type | UNGPT Verdict | Comparison Verdicts | Comments |
| --- | --- | --- | --- |
| GPT-4 blog (unedited) | 6% human | All said “AI” | ✅ Consistent |
| Human blog (2020) | 94% human | Mixed (some flagged it) | ✅ Impressive |
| AI + human rewritten piece | 52% human | Mostly flagged as “AI” | ✅ Better at reading nuance |
| Casual email | 87% human | GPTZero flagged it “AI” | ✅ UNGPT wins this round |
| Emotional essay | 98% human | One tool flagged it as fake | ✅ Nailed it |
| AI poem with style tweaking | 39% human | Others said “likely AI” | ✅ Close enough |

Not bad at all. Especially in how it handled hybrid content and emotionally loaded writing — something many other tools trip over.
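If you want to read the scorecard as verdicts rather than raw numbers, here is a rough Python sketch. The scores are copied from the table above; the 50% cutoff and the `label` helper are assumptions made up for illustration, not anything UNGPT documents.

```python
# "AI-free" scores (percent human) copied from the scorecard above.
scores = {
    "GPT-4 blog (unedited)": 6,
    "Human blog (2020)": 94,
    "AI + human rewritten piece": 52,
    "Casual email": 87,
    "Emotional essay": 98,
    "AI poem with style tweaking": 39,
}

# Assumption: 50 is an arbitrary illustrative cutoff,
# not a threshold that UNGPT publishes.
CUTOFF = 50


def label(score: int, cutoff: int = CUTOFF) -> str:
    """Turn a percent-human score into a coarse label."""
    return "leans human" if score >= cutoff else "leans AI"


labels = {name: label(score) for name, score in scores.items()}

# Print samples from least to most "human".
for name, score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{score:>3}% human  {labels[name]:<11}  {name}")
```

Notice that the hybrid piece lands barely above the cutoff. Move the line a few points and its label flips, which is a good reminder to treat mid-range scores as a shrug, not a ruling.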

What Makes UNGPT Stand Out?

It’s not just the scoring system. That’s nice and all, but what really caught me off guard was how UNGPT doesn’t penalize voice.

Let me explain.

Many AI detection tools I’ve tried will flag you just for using clean grammar, proper transitions, or a consistent tone, as if sounding “too structured” automatically makes you synthetic.

But UNGPT? It seems to get that humans can be articulate, too.

Also worth noting — it doesn’t freak out over contractions, slang, or stylistic flair. You can write like yourself, whether that means poetic, blunt, quirky, or somewhere in between, and it won’t assume it came from OpenAI’s basement.

Feature Breakdown

| Feature | Score (Out of 5) | Comments |
| --- | --- | --- |
| AI Detection Accuracy | ⭐⭐⭐⭐☆ (4.4) | Good with obvious AI and hybrid content |
| Speed | ⭐⭐⭐⭐⭐ (5.0) | Nearly instant, even on long-form content |
| Emotional Writing Detection | ⭐⭐⭐⭐☆ (4.5) | Better than most tools I’ve tried |
| Interface Simplicity | ⭐⭐⭐⭐☆ (4.2) | No frills, but clean and easy |
| False Positive Resistance | ⭐⭐⭐⭐☆ (4.0) | Less reactive than GPTZero, thankfully |
| Feedback Depth | ⭐⭐☆☆☆ (2.5) | No breakdowns or sentence analysis yet |

Where It Falters (Because Nothing’s Perfect)

Look — I like UNGPT. A lot. But it’s not a mind reader, and it doesn’t always tell you why it gave you a certain score.

That’s kind of frustrating.

For example, when it scored my hybrid piece at “52% human,” I wanted to know what tipped it one way or the other. Was it the rhythm? The vocabulary? The pacing?

But you don’t get that feedback. Just a number. Take it or leave it.

Also, there’s no tone customization. You can’t say, “Hey, I write sarcastically — does that affect my score?” Would be cool to have more nuanced categories in the future: “Likely journalistic,” “Casual voice,” “AI with edits,” etc.

Still — for a free tool, the signal-to-noise ratio is solid.

Who’s It For?

Ideal For:

- Students worried about getting flagged unfairly

- Writers editing AI drafts but still using their own voice

- Editors doing quick spot checks

- Teachers who want to double-check essays without overrelying on gut feeling

- Anyone in content review mode

Less useful for:

- Fiction authors — it’s not built to understand poetic structure

- People who want detailed analysis — no sentence-level breakdown yet

- Folks trying to humanize AI — it detects; it doesn’t rewrite

Final Thoughts: A Little Empathy in a World of Suspicion

What I appreciate about UNGPT — and this surprised me — is that it seems to have some kind of built-in empathy. Not in the kumbaya sense, but in how it approaches writing like something with layers, not just code or keywords.

It doesn’t punish you for being a clean writer. It doesn’t assume polished = AI.

That alone makes it a better companion than many others.

Would I use it again?

Absolutely. Especially when I’m editing stuff I know was AI-assisted, and I want to see how “human” it feels before hitting publish.

Would I rely on it completely?

No. Because nuance matters. But I trust it more than most in this category.

Final Scorecard

| Category | Verdict |
| --- | --- |
| Accuracy | 8.8/10 |
| Tone Sensitivity | 9/10 |
| Speed | 10/10 |
| Trustworthiness | 9/10 |
| User-Friendly Design | 8.5/10 |
| Overall Score | 9.1/10 |
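For the curious, the overall score lines up with a plain unweighted average of the five categories. That averaging method is an assumption on my part for this sketch; the numbers themselves come straight from the scorecard.

```python
from statistics import mean

# Category scores (out of 10) copied from the final scorecard.
category_scores = {
    "Accuracy": 8.8,
    "Tone Sensitivity": 9.0,
    "Speed": 10.0,
    "Trustworthiness": 9.0,
    "User-Friendly Design": 8.5,
}

# Assumption: every category counts equally (unweighted mean).
overall = round(mean(list(category_scores.values())), 1)

print(f"Overall: {overall} / 10")
```

The five scores sum to 45.3, so the mean is 9.06, which rounds to the 9.1 shown in the table.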

In Closing…

UNGPT.ai doesn’t just scan for AI. It listens. Or, well, it simulates listening in a way that makes your writing feel seen — even when you’re tired, overthinking it, and wondering if that last sentence was too “ChatGPT-y.”

It’s not perfect, but it’s smarter than most. And most importantly? It respects your voice.

That’s rare. And these days, rare is worth bookmarking.