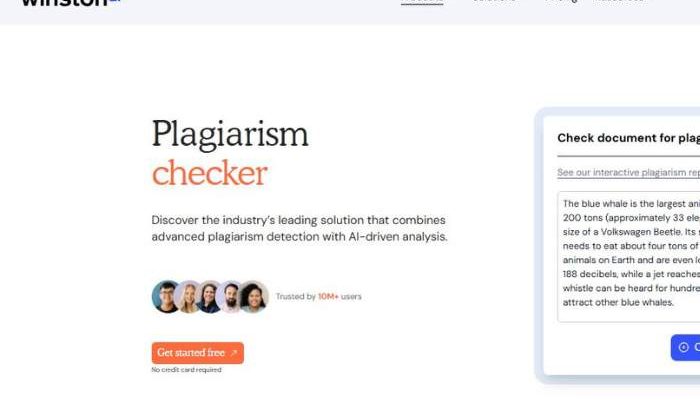

Winston AI Plagiarism Checker: My Unfiltered Thoughts

Winston’s plagiarism checker is a tool designed to help writers, educators, SEO professionals, and others make sure that text is original: no uncredited copying, no surprise matches.

It also comes bundled with an AI detector (to see whether the text was possibly generated by ChatGPT or another large language model), so you get a dual check: “plagiarism + possible AI authorship.”

Main claims:

- It scans the web, documents, and databases to find duplicate content. Winston says it compares your text against 400 billion webpages, documents, and online databases.

- Supports many languages: over 180 languages for plagiarism detection.

- Interactive reports: they highlight where content is duplicated, link to sources, let you manage citations and possibly filter or adjust sources, and can be shared (link or PDF).

- Confidentiality / security: your content is encrypted; you can (apparently) delete scanned documents; they do not use your content to train their model.

Also there’s a “Plagiarism Checker API” for developers, so it’s not just the regular UI.


What It Does Well (Pros)

From what I see, these are strong points — things that make me think this tool could be quite helpful.

| Strength | Why It’s Valuable |
| --- | --- |
| Large Coverage | 400 billion sources is huge. More sources = better chance of catching duplicate content. |
| Multilingual Support | If you write or check content in multiple languages, this is a big plus. Less risk of “Oh, this looks different because it’s in Spanish / French / etc.” slipping through. |
| Citation Management & Source Highlighting | Makes the process of fixing or attributing easier. Helps you see exactly which parts are too similar to something else. |
| Interactive, Shareable Reports | Good for collaboration, for clients, for educational settings. Instead of just “pass/fail” you get detail. |
| Security & Privacy | It’s important, especially in academic or proprietary content. Knowing you can delete your data, that it’s encrypted, that it isn’t used to train the model: these reduce the risk. |

What It Might Not Handle Perfectly (Limitations / “Watch-Outs”)

As much as I like it, there are some trade-offs, and I think you should know them.

- False positives / similarity vs plagiarism: Common phrases, generic sentences, or common expressions may get flagged even though they’re not copying in a problematic way. You’ll need to review the highlighted bits.

- Context / nuance: It might flag paraphrased/original-voice content if it shares structure or key phrases with existing sources. Not all matches are bad; some might be inevitable (“the best way to …”, “in summary …”, etc.).

- Cost / plan restrictions: Some functionality (e.g. full access, deeper scans, more thorough reports) is likely behind a paywall. From reviews, “free trial / limited use” is common.

- Time / length constraints: Very long documents may take more time, and chapter-length pieces or whole books may run into usage limits. Also, OCR (scanning images of text) adds complexity.

- Dependency on web and database coverage: If something is unpublished, behind paywalls, or not well indexed, the checker might not “see” it. So originality isn’t guaranteed just because nothing was flagged.
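To make the “similarity vs plagiarism” point concrete, here is a toy sketch of word n-gram overlap, one common way to measure textual similarity. This is my own illustration, not Winston’s actual algorithm; the function names and sample sentences are made up for the example.

```python
import re


def word_ngrams(text, n=3):
    """Return the set of lowercase word n-grams found in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(candidate, source, n=3):
    """Fraction of the candidate's n-grams that also appear in the source."""
    cand = word_ngrams(candidate, n)
    if not cand:
        return 0.0
    return len(cand & word_ngrams(source, n)) / len(cand)


# Hypothetical sample texts (not taken from any real report).
source = "In summary, the best way to learn a language is to practice every day."
stock = "In summary, the best way to bake bread is to weigh your flour."
copied = "The best way to learn a language is to practice every day."

stock_score = overlap_ratio(stock, source)    # shares only the stock opening
copied_score = overlap_ratio(copied, source)  # lifted almost word for word

print(f"stock phrasing: {stock_score:.2f}")   # prints "stock phrasing: 0.36"
print(f"copied text:    {copied_score:.2f}")  # prints "copied text:    1.00"
```

The bread sentence is about a different topic entirely, yet it still scores above zero purely because of the stock opening. That is the kind of match you review and usually wave through.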


My Take: Where It Fits Best

If I were you, here’s when I’d love having Winston AI’s plagiarism checker in my toolkit:

- Before publishing blog posts or articles, just to be safe. You catch duplicate content or unintended matches early, before real problems (SEO penalties, copyright issues, trust loss) arise.

- In education: for teachers checking student essays; for students wanting to be sure their work is clean. It gives detail, so it’s not just “you plagiarized” but “this sentence matches this source.”

- For SEO / content agencies: when you have multiple writers or content submitted by freelancers, and you want to keep duplicate content out of your campaigns. Sharing reports, deleting documents, and verifying originality are all helpful.

It might frustrate you if you’re writing in a more creative style, with a lot of metaphor, idiom, or rephrasing — sometimes you’ll be tweaking things that aren’t really “plagiarism” but just sound similar. If you over-correct, you might lose sparkle.

How to Use It Well (so you get full benefit)

Here are tips so you don’t waste time or get misled:

- Run your draft through the checker before finalizing. If you wait until after all the polishing, fixing duplicates or other problems might mean big rewrites; earlier is easier.

- When the report gives flagged sections, inspect why they were flagged: is it very common phrasing? Is it coincidence? If yes, maybe leave it or tweak lightly.

- Keep backups of the original so you can compare (“this was my wording” vs “tweaked wording”) — helps keep your voice intact.

- Use citation management: if a flagged passage is an intentional quote, add a citation rather than rewording it just to avoid acknowledging the source.

- Use the free trial or limited-usage tier first. Test it with your kind of writing (blogs? academic? creative?) to see how “sensitive” it is. If it works well, then pay.

- In multilingual work, test in all languages you’ll use — see how well detection works in non-English contexts, whether false flags jump up.
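If you end up with a long list of flagged passages, the “inspect why they were flagged” step can be roughed out in a few lines. This is a minimal sketch under my own assumptions: the `flagged` list, its `text` and `source` keys, the stock-phrase list, and the word threshold are all hypothetical, and none of it reflects Winston’s real report format.

```python
# Hypothetical stock phrases; extend with whatever is common in your niche.
STOCK_PHRASES = ("in summary", "the best way to", "on the other hand")


def triage(flagged, min_words=8):
    """Split flagged passages into (review, probably_noise) lists.

    A passage counts as probable noise only if it is short AND contains
    a stock phrase; everything else still deserves a human look.
    """
    review, noise = [], []
    for item in flagged:
        text = item["text"].lower()
        is_short = len(text.split()) < min_words
        has_stock = any(phrase in text for phrase in STOCK_PHRASES)
        (noise if is_short and has_stock else review).append(item)
    return review, noise


def noise_level(flagged, min_words=8):
    """Share of flagged passages that look like harmless common phrasing."""
    if not flagged:
        return 0.0
    _, noise = triage(flagged, min_words)
    return len(noise) / len(flagged)


# Made-up flags, as if copied by hand out of a report.
flagged = [
    {"text": "In summary, results vary.", "source": "example.com/a"},
    {"text": "The mitochondria is the powerhouse of the cell and drives metabolism.",
     "source": "example.com/b"},
    {"text": "The best way to start", "source": "example.com/c"},
]

review, noise = triage(flagged)
print(len(review), "to review,", len(noise), "probably noise")
```

Treat the output as a sorting aid only. Anything in the review pile still needs your own judgment, and a short stock phrase can occasionally sit inside a passage that really was copied.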

Emotional / Practical Considerations

Writing is more than putting words together. It’s about reputation, trust, owning your voice. Getting flagged for plagiarism (even accidentally) hurts — it can undermine confidence, damage SEO, or worse, credibility.

Winston AI, from what I see, offers comfort in that risk zone. It’s like having a guardrail: you can push the creative boundaries, but with fewer missteps.

Sometimes though, tools can make you second-guess yourself too much. That voice of “Will this be flagged?” can creep in, and then you might lean safe rather than interesting. That balance is personal: use the tool to guide, not to dictate.

My Verdict: Is It Worth Trying?

Short answer: Yes. I think it’s definitely worth giving it a go. Especially since they offer free or trial versions so you can test without commitment. The value is high if you care about originality and content integrity.

If I were you, I’d try it now. Feed in something you already wrote, see the report. See how many flags, whether the flagged ones are meaningful or annoying. If the tool’s “noise level” is low (false positives manageable) and the report is helpful, then it has a place in your writing workflow.