Machine Learning: A Beginner’s Guide

In our daily lives, we are surrounded by human beings who possess the remarkable ability to learn from their experiences. In contrast, machines or computers function based on the instructions provided to them. But what if machines could also learn from experiences and past data like humans? This is where Machine Learning (ML) comes into the picture.

What is Machine Learning

ML is a subset of Artificial Intelligence (AI) that primarily deals with the development of algorithms that allow computers to learn independently from new data and past experience. As learning progresses and the number of available samples increases, the performance of ML algorithms typically improves.

Table of contents:

- How Does Machine Learning Work?

- Features of Machine Learning

- Machine Learning Types

- 5 Uses of Machine Learning

- How Companies are using Machine Learning

- History of Machine Learning

- Challenges of Implementing Machine Learning

For instance, Deep Learning is a subfield of ML that trains multi-layer neural networks to learn from examples, much as humans do. Given enough data, it often outperforms conventional ML algorithms on tasks such as image and speech recognition.

Defined back in 1959

Arthur Samuel introduced the term “Machine Learning” in 1959. In summary, Machine Learning enables machines to learn from data automatically, improve their performance based on experiences, and make predictions without explicit programming.

Machine Learning Today

In today’s world, the rapid growth of big data, the Internet of Things (IoT), and ubiquitous computing has made machine learning a crucial tool for problem-solving across a wide range of areas. Some of the key areas where machine learning is now being utilized are:

- Computational finance (credit scoring, algorithmic trading)

- Computer vision (facial recognition, motion tracking, object detection)

- Computational biology (DNA sequencing, brain tumor detection, drug discovery)

- Automotive, aerospace, and manufacturing (predictive maintenance)

- Natural language processing (voice recognition)

By using historical data, which is referred to as training data, machine learning algorithms can create complex mathematical calculations and models that can predict outcomes or make decisions without being explicitly programmed.

Machine learning merges computer science and statistics to build predictive models that can learn from data. In general, the more relevant, good-quality data provided, the better the performance of the algorithm.

The ability of a machine to learn is dependent on its capacity to enhance its performance by gaining access to more data. This means that machine learning has the potential to revolutionize many industries by providing more accurate predictions and better decision-making capabilities.

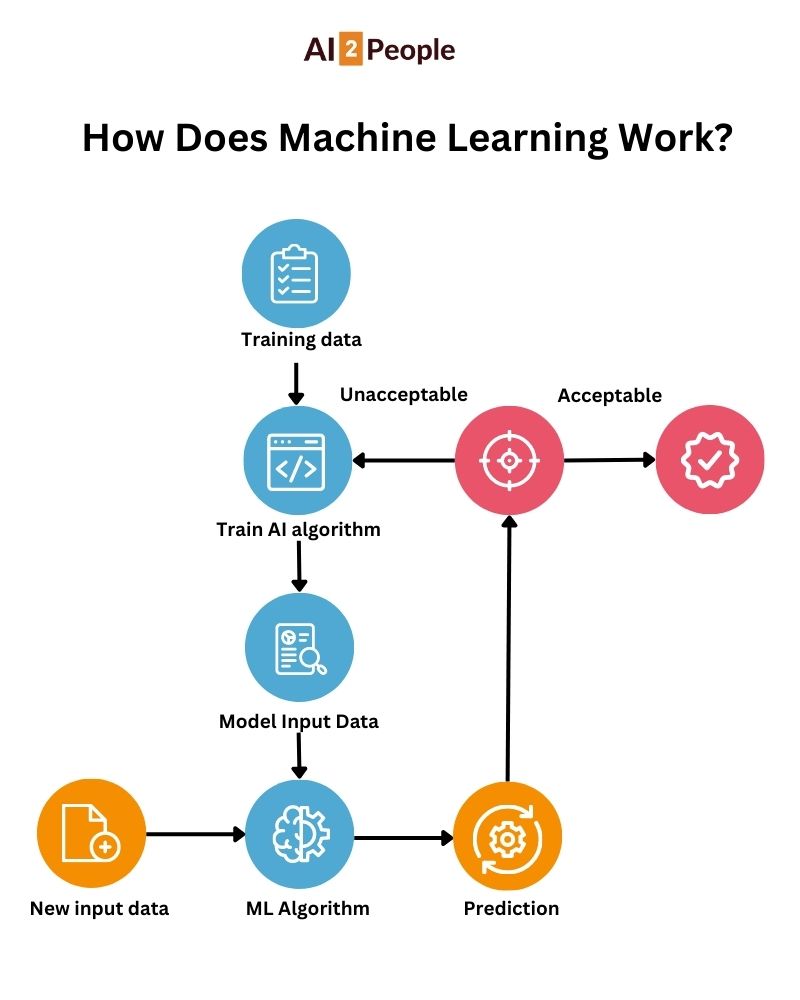

How Does Machine Learning Work?

Machine learning allows computers to learn from historical data, identify patterns, and make predictions based on the data received. The accuracy of those predictions depends heavily on the amount and quality of the data the system has access to. In general, more representative, good-quality data leads to more accurate predictions, although the gains tend to level off.

When faced with a complex problem that requires predictions, we no longer need to write code from scratch. Instead, we can feed the relevant data to generic algorithms and let the machine build the logic and predict the output based on the input data. This revolutionary approach has transformed the way we approach problem-solving.
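As a minimal sketch of what "letting the machine build the logic" can look like, the snippet below learns a single cut-off value from labeled examples instead of hard-coding it. The function names (`fit_threshold`, `predict`) and the spam data are hypothetical and chosen only for illustration; real systems use far richer models.

```python
def fit_threshold(values, labels):
    """Pick the cut-off that misclassifies the fewest training examples."""
    candidates = sorted(set(values))
    best_threshold, best_errors = None, len(values) + 1
    for lower, upper in zip(candidates, candidates[1:]):
        threshold = (lower + upper) / 2  # midpoint between neighbouring values
        # Count examples where "value above threshold" disagrees with the label.
        errors = sum((v > threshold) != bool(y) for v, y in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict(threshold, value):
    """Apply the learned rule: 1 if the value is above the cut-off, else 0."""
    return int(value > threshold)


# Hypothetical training data: number of links in an email, 1 = spam.
link_counts = [0, 1, 2, 7, 9, 12]
is_spam = [0, 0, 0, 1, 1, 1]

threshold = fit_threshold(link_counts, is_spam)
print(threshold)               # 4.5, learned from the data rather than written by hand
print(predict(threshold, 10))  # 1 (classified as spam)
```

The same `fit_threshold` function would produce a different rule if it were given different data, which is the point: the logic comes from the examples, not from the programmer.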

The following block diagram illustrates the workings of a typical machine learning algorithm:

Features of Machine Learning:

- Machine learning uses data to detect patterns in a given dataset.

- It learns from previous data and improves automatically.

- It is a data-driven technology highly dependent on the quality of data input.

- Machine learning is very similar to data mining, as both deal with huge amounts of data.

Types of Machine Learning

Machine learning approaches can be broadly classified into four types:

- Supervised learning,

- Unsupervised learning,

- Semi-supervised learning, and

- Reinforcement learning.

Supervised Machine Learning

Supervised machine learning involves training machines using labeled datasets, allowing them to predict outputs based on the provided training. In supervised learning, each input in the training data is already paired with its correct output, and the machine is trained on these input-output pairs. The primary objective is to learn a mapping from the input variables to the output variable. Supervised learning is further classified into two categories: classification and regression.

- Classification algorithms deal with classification problems where the output variable is categorical, such as yes or no, true or false, male or female, etc. Real-world applications of classification include spam detection and email filtering. Examples of classification algorithms include the Random Forest Algorithm, Decision Tree Algorithm, Logistic Regression Algorithm, and Support Vector Machine Algorithm.

- Regression algorithms handle regression problems where the output variable is continuous, such as a price or a temperature. The relationship between input and output may be linear, as in linear regression, or non-linear, as in decision tree regression. Examples include weather prediction, market trend analysis, etc. Popular regression algorithms include the Simple Linear Regression Algorithm, Multivariate Regression Algorithm, Decision Tree Algorithm, and Lasso Regression.
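To make the regression case concrete, here is a small sketch of simple linear regression fitted with ordinary least squares. The function name `fit_line` and the housing figures are made up for illustration, and the data is deliberately chosen to lie on a straight line so the result is easy to check.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data: floor area in square metres -> price in thousands.
areas = [50, 60, 80, 100]
prices = [150, 180, 240, 300]

slope, intercept = fit_line(areas, prices)
predicted_price = slope * 70 + intercept  # continuous prediction for an unseen input
print(slope, intercept, predicted_price)
```

A classification algorithm would follow the same train-then-predict pattern, but its output would be a category (for example, spam or not spam) rather than a number.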

Unsupervised Machine Learning

Unsupervised machine learning is a learning technique that does not require supervision. Machines are trained using an unlabeled dataset and discover structure in the data without being told the correct outputs. An unsupervised learning algorithm aims to group the unsorted dataset based on input similarities, differences, and patterns. Unsupervised machine learning is classified into clustering and association.

- Clustering refers to grouping objects into clusters based on parameters such as similarities or differences between objects. For example, grouping customers by the products they purchase. Some known clustering algorithms include the K-Means Clustering Algorithm, Mean-Shift Algorithm, and DBSCAN Algorithm. Principal Component Analysis and Independent Component Analysis are often listed alongside them, although they are dimensionality reduction techniques rather than clustering algorithms.

- Association learning refers to identifying typical relations between the variables of a large dataset. It determines the dependency of various data items and maps associated variables. Typical applications include web usage mining and market data analysis. Popular association rule mining algorithms include the Apriori Algorithm, Eclat Algorithm, and FP-Growth Algorithm.
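The clustering idea can be sketched with a stripped-down K-Means on one-dimensional data. This is an illustrative sketch, not a production implementation: the function name `k_means_1d` is hypothetical, the starting centroids are fixed by hand (real implementations usually pick them randomly), and the customer spend figures are invented.

```python
def k_means_1d(points, centroids, iterations=10):
    """K-Means (Lloyd's algorithm) on one-dimensional data."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical unlabeled data: monthly spend per customer.
spend = [10, 12, 14, 80, 85, 90]

centroids, clusters = k_means_1d(spend, centroids=[10, 90])
print(centroids)  # [12.0, 85.0]
print(clusters)   # [[10, 12, 14], [80, 85, 90]]
```

No labels are supplied at any point; the two groups of low and high spenders emerge from the distances between the data points alone.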

Semi-Supervised Machine Learning

Semi-supervised learning combines characteristics of both supervised and unsupervised machine learning algorithms. It trains on a combination of labeled and unlabeled data, which helps when labels are scarce or expensive to obtain and unlabeled data is plentiful.

For example, consider a college student learning a concept. A student learning a concept under a teacher’s supervision is supervised learning. In unsupervised learning, a student self-learns the same concept at home without a teacher’s guidance. Meanwhile, a student revising the concept after learning under the direction of a teacher in college is a semi-supervised form of learning.
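One common way to combine labeled and unlabeled data is self-training (pseudo-labeling), sketched below. It is only one of several semi-supervised techniques and is not necessarily what any particular product uses. The nearest-centroid classifier, the `margin` parameter, and the data are assumptions made for illustration.

```python
def centroid(values):
    """Mean of a list of numbers."""
    return sum(values) / len(values)


def self_train(labeled, unlabeled, rounds=3, margin=2.0):
    """Self-training with a nearest-centroid classifier.

    labeled:   list of (value, label) pairs with labels 0 or 1
    unlabeled: list of values without labels
    Returns the enlarged labeled set and the values left unlabeled.
    """
    labeled = list(labeled)
    pool = list(unlabeled)
    for _ in range(rounds):
        c0 = centroid([v for v, y in labeled if y == 0])
        c1 = centroid([v for v, y in labeled if y == 1])
        remaining = []
        for v in pool:
            d0, d1 = abs(v - c0), abs(v - c1)
            if abs(d0 - d1) >= margin:
                # Confident prediction: adopt it as a pseudo-label.
                labeled.append((v, 0 if d0 < d1 else 1))
            else:
                # Too close to call: keep the point unlabeled for now.
                remaining.append(v)
        pool = remaining
    return labeled, pool


# Hypothetical data: two labeled examples and four unlabeled ones.
labeled_examples = [(1.0, 0), (9.0, 1)]
unlabeled_values = [2.0, 3.0, 8.0, 5.2]

enlarged, still_unlabeled = self_train(labeled_examples, unlabeled_values)
print(enlarged)
print(still_unlabeled)
```

The model starts from just two labeled points, labels the unlabeled points it is confident about, and leaves the ambiguous one (5.2, roughly halfway between the groups) alone.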

Reinforcement Learning

Reinforcement learning is a feedback-based process where the AI component automatically takes stock of its surroundings, takes action, learns from experiences, and improves performance. Reinforcement learning is applied across different fields such as game theory, information theory, and multi-agent systems. Reinforcement learning is further divided into positive and negative reinforcement learning.

Positive reinforcement learning refers to adding a reinforcing stimulus after a specific behavior of the agent, making it more likely that the behavior may occur again in the future, such as adding a reward after a behavior. Negative reinforcement learning refers to strengthening a specific behavior that avoids a negative outcome.
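The feedback loop described above can be illustrated with tabular Q-learning on a tiny corridor, where the agent is rewarded only for reaching the last cell. This is a simplified sketch: the environment, constants, and function names are invented, and every state-action pair is replayed in a fixed order rather than explored randomly so that the result is deterministic.

```python
N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # move left or move right
ALPHA = 0.5         # learning rate
GAMMA = 0.9         # discount factor for future rewards


def step(state, action):
    """Environment: returns (next_state, reward) for a move."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0  # reward on reaching the goal
    return next_state, reward


def train(sweeps=200):
    """Learn action values by repeatedly trying every action in every state."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for state in range(N_STATES - 1):  # the goal state is terminal
            for a_index, action in enumerate(ACTIONS):
                next_state, reward = step(state, action)
                # Q-learning update: move the estimate toward
                # reward + discounted best value of the next state.
                target = reward + GAMMA * max(q[next_state])
                q[state][a_index] += ALPHA * (target - q[state][a_index])
    return q


q_table = train()
# Greedy policy: in each non-terminal state, pick the action with the highest value.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)  # [1, 1, 1, 1], i.e. always move right toward the reward
```

The reward acts as the reinforcing stimulus: actions that lead toward the goal accumulate higher values, so the agent becomes more likely to repeat them.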

Supervised vs Unsupervised Machine Learning

For example, a supervised learning model can predict flight times based on peak hours at an airport, traffic congestion in the air, and weather conditions (among other possible parameters). However, humans must label the dataset to train the model on how these factors can affect flight timings. A supervised model depends on knowing the outcome to conclude that snow is a factor in flight delays.

On the other hand, unsupervised learning models are constantly working without human interference. They find and arrive at a structure of sorts using unlabeled data. The only human help needed here is for the validation of output variables.

For example, when someone shops for a new laptop online, an unsupervised learning model will figure out that the person belongs to a group of buyers who buy a set of related products together. However, it is the job of a serious data scientist or analyst to validate that a recommendation engine offers options for a laptop bag, a screen guard, and a car charger.
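A rough sketch of how "bought together" patterns can be measured is shown below, using the support and confidence metrics from association rule mining. The baskets and function names are hypothetical, and real recommendation engines are considerably more involved.

```python
def support(baskets, items):
    """Fraction of baskets that contain all of the given items."""
    items = set(items)
    return sum(items <= basket for basket in baskets) / len(baskets)


def confidence(baskets, antecedent, consequent):
    """Of the baskets containing the antecedent, the share that also contain the consequent."""
    both = set(antecedent) | set(consequent)
    return support(baskets, both) / support(baskets, antecedent)


# Hypothetical, unlabeled purchase history: one set of products per order.
baskets = [
    {"laptop", "laptop bag", "screen guard"},
    {"laptop", "laptop bag"},
    {"laptop", "mouse"},
    {"phone", "screen guard"},
]

laptop_support = support(baskets, {"laptop"})
bag_given_laptop = confidence(baskets, {"laptop"}, {"laptop bag"})
print(laptop_support)    # 0.75
print(bag_given_laptop)  # about 0.67: two of the three laptop orders include a bag
```

An analyst reviewing such numbers can then decide whether a rule like "laptop buyers also want a laptop bag" is sensible enough to drive recommendations.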

Typical Machine Learning Algorithms Explained

Machine learning (ML) algorithms are widely used in various fields. Here are some commonly used ML algorithms:

- Neural Networks: Neural networks imitate the working of the human brain, consisting of a vast number of connected processing nodes. Neural networks excel at pattern recognition, and they play a critical role in several applications such as natural language translation, image recognition, speech recognition, and image creation.

- Linear Regression: This algorithm predicts numerical values, based on the linear relationship between different values. For instance, linear regression can be utilized to anticipate house prices based on historical data of the region.

- Logistic Regression: This supervised learning algorithm makes predictions for categorical response variables such as “yes/no” responses to queries. Logistic regression can be used for applications such as categorizing spam and ensuring quality control on a production line.

- Clustering: Using unsupervised learning, clustering algorithms can identify patterns in data and group them together. Data scientists can leverage computers to identify distinctions between data items that humans may overlook.

- Decision Trees: Decision trees can be employed to predict numerical values (regression) and classify data into categories. Decision trees employ a branching sequence of linked decisions that can be represented with a tree diagram. One of the advantages of decision trees is that they are easy to validate and audit, unlike the black box of neural networks.

- Random Forests: In a random forest, the ML algorithm predicts a value or category by combining the results from several decision trees.
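As one worked example from the list above, logistic regression can be sketched in a few lines using gradient descent. The data, learning rate, and number of epochs are arbitrary assumptions for illustration, and the single feature is assumed to be already centered around zero.

```python
import math


def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, learning_rate=0.1, epochs=1000):
    """Fit weight w and bias b by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


# Hypothetical centered feature (e.g. a standardized "spamminess" score), 1 = spam.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

w, b = fit_logistic(xs, ys)
prob_spam = sigmoid(w * 1.5 + b)       # new email with a high score
prob_not_spam = sigmoid(w * -1.5 + b)  # new email with a low score
print(prob_spam > 0.5, prob_not_spam < 0.5)  # True True
```

The model outputs a probability, and a "yes/no" decision is obtained by comparing it with a cut-off such as 0.5.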

Top 5 Uses of Machine Learning: How It’s Transforming Various Industries

It’s no surprise that machine learning is quickly becoming one of the most popular technologies in various industries. Examples are countless. Let’s take a closer look at the top 5 applications of machine learning and how it’s transforming the finance, marketing, healthcare, retail, and blockchain sectors.

Finance Sector: Fighting Fraud and Enhancing Investments

The finance sector is increasingly leveraging machine learning technology to combat fraudulent activities and make better investment decisions. By using ML-derived insights, financial institutions can identify potential investment opportunities and decide when to trade. Additionally, data mining methods help cyber-surveillance systems detect warning signs of fraudulent activities and take the necessary steps to neutralize them.

Some of the popular examples of financial institutions that have already partnered with tech companies to leverage the benefits of machine learning include Citibank, which has partnered with fraud detection company Feedzai to handle online and in-person banking fraud, and PayPal, which uses several machine learning tools to differentiate between legitimate and fraudulent transactions between buyers and sellers.

Marketing: Tailoring Customer Experiences and Boosting ROI

In the marketing industry, artificial intelligence (AI) marketing is used to make automated decisions based on data collection, data analysis, and additional observations of audience or economic trends that may impact marketing efforts.

AI marketing tools use data and customer profiles to learn how to best communicate with customers and then serve them tailored messages at the right time without intervention from marketing team members, ensuring maximum efficiency.

Some of the popular AI marketing use cases include data analysis, natural language processing (NLP), media buying, automated decision-making, content generation, and real-time personalization. AI-powered marketing tools help businesses make data-driven decisions and optimize their marketing strategy to maximize return on investment (ROI).

Healthcare: Revolutionizing Patient Diagnoses and Treatment

The healthcare industry is leveraging machine learning technology to improve patient diagnoses and treatment. Wearable devices and sensors, such as wearable fitness trackers and smart health watches, monitor users’ health data to assess their health in real-time.

Additionally, machine learning algorithms allow medical experts to predict the lifespan of a patient suffering from a fatal disease with increasing accuracy.

Machine learning is also contributing significantly to drug discovery and personalized treatment. Machine learning helps speed up the process of discovering or manufacturing a new drug, and ML technology looks for patients’ response markers by analyzing individual genes, providing targeted therapies to patients.

Companies like Genentech have collaborated with GNS Healthcare to leverage machine learning and simulation AI platforms to innovate biomedical treatments and address healthcare issues.

Retail Sector: Personalizing Customer Shopping Experience

Retail websites use machine learning methods to capture data, analyze it, and deliver personalized shopping experiences to their customers. Machine learning techniques are also used for marketing campaigns, customer insights, customer merchandise planning, and price optimization.

The global recommendation engine market is expected to reach a valuation of $17.30 billion by 2028, according to a September 2021 report by Grand View Research, Inc.

Some of the popular examples of recommendation systems include Amazon, which uses artificial neural networks (ANN) to offer intelligent, personalized recommendations relevant to customers based on their recent purchase history, comments, bookmarks, and other online activities.

Netflix and YouTube rely heavily on recommendation systems to suggest shows and videos to their users based on their viewing history. Additionally, virtual assistants or conversational chatbots powered by machine learning, natural language processing (NLP), and natural language understanding (NLU) are also used to automate customer shopping experiences.

Blockchain: The New Trend in AI

Blockchain, the revolutionary technology that underlies cryptocurrencies like Bitcoin, has become increasingly valuable to a wide range of businesses. By utilizing a decentralized ledger to record transactions, this technology promotes transparency and accountability between all parties involved in a transaction, eliminating the need for intermediaries.

Furthermore, once a transaction is recorded on the blockchain, it cannot be deleted or altered, providing an unprecedented level of security and immutability.

In the near future, blockchain is expected to merge with AI and other cutting-edge technologies such as machine learning and natural language processing. This is because certain features of blockchain, such as its decentralized ledger, transparency, and immutability, complement the capabilities of these other technologies.

For example, major banks like Barclays and HSBC are working on blockchain-powered projects that offer interest-free loans to their customers. By using machine learning algorithms to analyze customer spending patterns, these banks can accurately assess a borrower’s creditworthiness and make informed lending decisions.

How Companies are Leveraging Machine Learning for Business Success

Machine learning has become a vital component of some companies’ business models, such as Netflix’s suggestions algorithm or Google’s search engine. However, even companies not primarily focused on machine learning are integrating it into their operations.

A recent survey indicates that 67% of companies are already using machine learning in some capacity.

While some companies are still trying to figure out how to leverage machine learning to their advantage, others are already using it in several ways. To determine if a task is suitable for machine learning, researchers from the MIT Initiative on the Digital Economy developed a 21-question rubric in a 2018 paper.

The researchers discovered that no occupation will be untouched by machine learning, but no occupation is likely to be completely taken over by it. The key to unlocking machine learning’s potential is to reorganize jobs into discrete tasks, some of which can be done by machine learning and others that require human intervention.

Here are a few ways companies are already using machine learning to their advantage:

- Recommendation Algorithms: Machine learning powers recommendation engines behind Netflix, YouTube, Facebook, and product recommendations. By learning our preferences, the algorithms can curate personalized content that appeals to us. On Twitter, the algorithm selects the tweets it believes will interest us, and on Facebook, it determines what ads to display, what posts to share, and what content we’ll find appealing.

- Image Analysis and Object Detection: Machine learning can analyze images to identify people and differentiate between them, although facial recognition algorithms are a source of controversy. Hedge funds famously use machine learning to analyze parking lot occupancy to help them make informed bets on company performance.

- Fraud Detection: Machines use machine learning to analyze patterns, such as how an individual typically spends or where they shop, to detect fraudulent credit card transactions, log-in attempts, and spam emails.

- Automatic Helplines or Chatbots: Many companies have deployed online chatbots that allow customers or clients to interact with machines rather than humans. These algorithms use machine learning and natural language processing to learn from past conversations and provide appropriate responses. Chatbots like ChatGPT are also used for various tasks by companies to automate repetitive tasks.

- Self-Driving Cars: Self-driving cars rely heavily on machine learning, particularly deep learning, for their technology. Although controversial, AI in cars is slowly taking over and helping to improve safety.

- Medical Imaging and Diagnostics: Machine learning programs can examine medical images and other information to identify markers of illness, such as predicting cancer risk based on a mammogram.

All in all, machine learning is transforming how businesses operate and remain competitive. By reorganizing jobs into discrete tasks, companies can leverage machine learning to streamline operations, detect fraud, and enhance customer service. As machine learning continues to evolve, its potential applications for businesses are limitless.

History of Machine Learning

Machine learning is no longer science fiction. It has become an integral part of our daily lives, from virtual assistants like Amazon’s Alexa to self-driving cars. The concept of machine learning, however, has a long and fascinating history. Let’s take a look at some of the milestones in the evolution of machine learning.

Pre-1940: The Early History of Machine Learning

- In 1834, Charles Babbage, the father of the computer, envisioned a device that could be programmed with punch cards. While the machine was never built, its logical structure influenced the design of modern computers. In 1936, Alan Turing proposed a theory for how a machine could execute a set of instructions.

The Era of Stored Program Computers

- In 1945, ENIAC, the first electronic general-purpose computer, was completed. It had to be reprogrammed manually by setting switches and cables, which led to the development of stored program computers such as EDSAC in 1949 and EDVAC in 1951.

- In 1943, a human neural network was modeled with an electrical circuit. In 1950, scientists started putting this idea into practice and analyzing how human neurons might work.

Computing Machinery and Intelligence

- In 1950, Alan Turing published a seminal paper titled “Computing Machinery and Intelligence,” which examined the topic of artificial intelligence. He asked the question, “Can machines think?”

Machine Intelligence in Games

Arthur Samuel, a pioneer of machine learning, created a program in 1952 that helped an IBM computer to play checkers. The program became better the more it played. In 1959, Samuel coined the term “Machine Learning.”

The First “AI” Winter

Between 1974 and 1980, AI and machine learning researchers experienced a tough time, known as the “AI winter.” Machine translation failed, and interest in AI reduced, resulting in reduced government funding for research.

Machine Learning from Theory to Reality

- In 1959, the first neural network was applied to a real-world problem to remove echoes over phone lines using an adaptive filter.

- In 1985, Terry Sejnowski and Charles Rosenberg invented a neural network called NETtalk, which was able to teach itself how to pronounce 20,000 words correctly in one week.

- In 1997, IBM’s Deep Blue computer defeated world chess champion Garry Kasparov, becoming the first computer to beat a reigning world champion in a match.

Machine Learning in the 21st Century

- In 2006, computer scientist Geoffrey Hinton named neural net research “deep learning,” which has become one of the most trending technologies.

- In 2012, Google created a deep neural network that could recognize humans and cats in YouTube videos. In 2014, the chatbot “Eugene Goostman” was reported to have convinced 33% of human judges in a Turing test that it was not a machine.

- Facebook’s DeepFace, created in 2014, claimed to recognize a person with the same precision as a human.

- In 2016, AlphaGo defeated world champion Lee Sedol at the game of Go, and in 2017, it defeated the game’s top player, Ke Jie. In 2017, Alphabet’s Jigsaw team built an intelligent system that could learn online trolling by reading millions of comments on various websites.

Machine Learning at Present

Machine learning has made significant advancements in research, and it is present everywhere around us. Modern machine learning models can be used to make various predictions, such as weather prediction, disease prediction, stock market analysis, etc. The established families of machine learning are supervised, unsupervised, and reinforcement learning, and they cover techniques such as clustering, classification, decision trees, and SVM algorithms.

Challenges of Implementing Machine Learning in Businesses

As machine learning technology continues to evolve, it’s no surprise that it has become an integral part of our lives, making many of our daily tasks easier and more convenient.

However, as more and more businesses implement machine learning into their operations, a number of ethical concerns have arisen about the impact of AI technologies on our society.

Let’s take a closer look at some of the challenges associated with machine learning implementation and how these challenges can be addressed.

Challenge 1: Technological Singularity

The concept of technological singularity, which refers to the point where artificial intelligence surpasses human intelligence, has been a topic of discussion for many years. Although researchers are not overly concerned about this happening in the near future, the prospect of superintelligence raises many ethical questions, especially with regard to the use of autonomous systems such as self-driving cars.

While it’s impossible to predict if or when a driverless car would have an accident, the question of responsibility and liability is still up for debate. Should we continue to develop fully autonomous vehicles, or limit them to semi-autonomous ones to ensure human involvement in the driving process? These are the types of ethical debates that are happening as new AI technologies continue to emerge.

Challenge 2: AI Impact on Jobs

One of the most significant concerns regarding the implementation of artificial intelligence is its impact on jobs. While there is a perception that AI will lead to job losses, the market demand for specific job roles will likely shift instead.

As with any new, disruptive technology, industries and businesses will need to adapt to keep up with changing demands. For example, as more manufacturers shift their focus to electric vehicle production, the demand for jobs in this sector will likely increase.

Similarly, while some jobs may become redundant due to automation, there will still be a need for individuals to help manage AI systems and address more complex problems within affected industries such as customer service. The biggest challenge will be helping people transition to new roles that are in demand.

Challenge 3: Privacy Concerns

Privacy is a key concern in machine learning, spanning data privacy, data protection, and data security. With recent legislation like GDPR and the CCPA, individuals have more control over their personal data. However, incorporating AI technology into businesses can create new vulnerabilities and opportunities for surveillance, hacking, and cyberattacks. As a result, investments in security have become a top priority for companies to mitigate these risks.

Challenge 4: Bias and Discrimination

Bias and discrimination are some of the most pressing ethical questions raised by the use of machine learning. Even with good intentions, AI systems can be biased due to the data they are trained on, which may be generated by biased human processes.

Instances of bias and discrimination in applications like facial recognition software and social media algorithms have led to calls for greater accountability and oversight. As businesses become more aware of these risks, they have become more active in the discussion around AI ethics and values.

Challenge 5: Accountability

The lack of significant legislation to regulate AI practices means that there is no real enforcement mechanism to ensure ethical AI is practiced.

Ethical frameworks have emerged to guide the construction and distribution of AI models within society, but the combination of distributed responsibility and a lack of foresight into potential consequences is not conducive to preventing harm to society. As a result, companies need to be proactive in establishing ethical guidelines and frameworks for AI development and use.

Final Words

Machine learning has revolutionized the way businesses make decisions by providing them with accurate predictions and systems that keep learning from data. This technology has become crucial in streamlining business operations in various industries such as manufacturing, retail, healthcare, energy, and financial services. With data-driven decisions, companies can optimize their current operations while searching for new methods to ease their overall workload.

As computer algorithms continue to become more intelligent, we can expect the adoption of machine learning to keep growing in the years ahead. Machine learning is a game-changer for the business world, and companies that embrace this technology are well placed to stay ahead of their competitors.